2023/02/23

CXL Memory Expansion and Pooling for Cloud Servers

CXL Memory Expansion and Pooling for Cloud Servers

Our personal and professional lives create and consume large amounts of information, while compute-intensive AI and ML workloads are increasingly hampered by memory bottlenecks. In the traditional architecture, memory is attached directly to a CPU or GPU and cannot be shared with other CPUs or GPUs. Although DRAM accounts for up to 50% of server costs, up to 25% of that memory is stranded (installed but sitting idle) at any given time. Cloud service providers need new architectures to store, analyze, and deliver the growing volume of data.
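Combining the two upper-bound figures above shows how much server spend can be tied up in idle memory. The sketch below assumes DRAM cost scales linearly with installed capacity; the $20,000 server price is a hypothetical number used only for illustration.

```python
def stranded_fraction(dram_share: float, stranded_share: float) -> float:
    """Fraction of total server cost spent on DRAM that sits unused.

    Assumes DRAM cost is proportional to installed capacity.
    """
    return dram_share * stranded_share


def stranded_dram_cost(server_cost: float, dram_share: float, stranded_share: float) -> float:
    """Cost of the stranded DRAM in a single server."""
    return server_cost * stranded_fraction(dram_share, stranded_share)


if __name__ == "__main__":
    # Upper-bound figures from the article: DRAM is up to 50% of server cost,
    # and up to 25% of that memory is stranded at a given time.
    print(stranded_fraction(0.5, 0.25))           # 0.125
    # Hypothetical $20,000 server, for illustration only.
    print(stranded_dram_cost(20_000, 0.5, 0.25))  # 2500.0
```

In other words, at the upper bounds quoted, up to 12.5% of a server's total cost can be idle DRAM.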

Compute Express Link (CXL) is an open standard interconnect for processors, accelerators, and memory expansion. CXL memory controllers enable memory expansion, pooling, and sharing solutions that reduce total cost of ownership (TCO) by increasing memory utilization, flexibility, and availability. CXL memory controllers are built for the data center to unlock application-specific workloads in AI training and inference, machine learning, in-memory databases, memory tiering, and multi-tenant use cases.
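Why pooling raises utilization can be shown with a toy model: with dedicated memory, every server must be provisioned for its own peak demand, whereas a shared pool only needs to cover the peak of the combined demand. The sketch below is a simplified illustration under that assumption; the function names and the demand figures are hypothetical, and it ignores real-world factors such as allocation granularity, access latency, and device capacity steps. It does not describe how any CXL controller actually allocates memory.

```python
from typing import List

# demands[s][t] = GB of memory needed by server s at time step t (hypothetical data)


def dedicated_capacity(demands: List[List[int]]) -> int:
    """Total DRAM needed when each server is sized for its own peak demand."""
    return sum(max(server) for server in demands)


def pooled_capacity(demands: List[List[int]]) -> int:
    """DRAM needed when all servers draw from one shared pool.

    The pool only has to cover the peak of the combined demand.
    """
    totals = [sum(step) for step in zip(*demands)]
    return max(totals)


def average_stranded(capacity: int, demands: List[List[int]]) -> float:
    """Average GB of provisioned memory left idle per time step."""
    totals = [sum(step) for step in zip(*demands)]
    return sum(capacity - t for t in totals) / len(totals)


if __name__ == "__main__":
    demands = [
        [64, 32, 16, 32],  # server A
        [16, 64, 32, 16],  # server B
        [32, 16, 64, 16],  # server C
    ]
    dedicated = dedicated_capacity(demands)
    pooled = pooled_capacity(demands)
    print(dedicated, average_stranded(dedicated, demands))  # 192 92.0
    print(pooled, average_stranded(pooled, demands))        # 112 12.0
```

Because the three servers peak at different times, the shared pool in this example needs 112 GB instead of 192 GB, and average idle memory drops from 92 GB to 12 GB.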

Compute Express Link (CXL) is an open standard for processors, accelerators, and memory expansion. CXL memory controllers enable memory expansion, pooling and sharing solutions to reduce total cost of ownership (TCO) by increasing memory utilization, flexibility and availability. CXL memory controllers are built for data center to unlock application-specific workloads in AI training and inference, machine learning, in-memory databases, memory tiering, and multi-tenant use-cases.

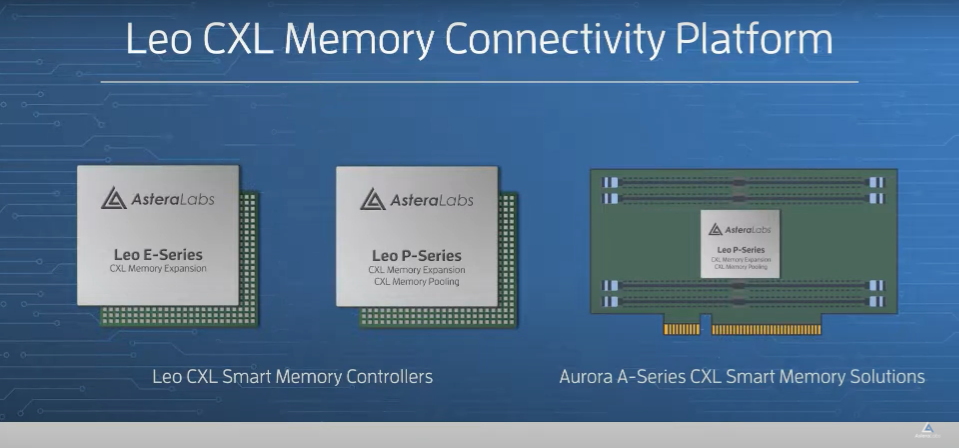

Astera Labs - Leo CXL Memory Connectivity Platform

The Astera Labs Leo Memory Connectivity Platform supports memory expansion, pooling, and sharing to eliminate memory bottlenecks and lower TCO. Leo controllers offer server-grade RAS (reliability, availability, and serviceability), end-to-end security, fleet management, low-latency DDRx, and seamless interoperability with all major CPU, GPU, and memory vendors.

Related Products

A100 80GB PCIe GPU | NVIDIA

The NVIDIA® A100 80GB PCIe card delivers unprecedented acceleration to power the world’s highest-performing elastic data centers for AI, data analytics, and high-performance computing (HPC) applications.

2-Mbit I2C EEPROM | STMicroelectronics

The M24M02 is a 2-Mbit I2C-compatible EEPROM organized as 256 K × 8 bits. It operates from a supply voltage of 1.8 V to 5.5 V over an ambient temperature range of –40 °C to +85 °C.

Article Source:

1. Astera Labs: Unlocking Cloud Server Performance with CXL

2. Astera Labs: Astera Labs Enters Pre-Production Phase of Leo Memory Connectivity Platform for CXL-Attached Memory Expansion and Pooling